In this case study I will discuss the potential for artificial intelligence (AI) to transform education in the Global South, with a particular focus on the continent of Africa. I will start with a description of AI and the most recent developments in this field. I will then consider the ways in which AI can be used in education. I will consider some of the ethical issues of AI, before conducting a detailed analysis of Global South perspectives, examining the potential for generative AI to meet the needs of United Nations Sustainable Development Goal 4, and the potential barriers and enablers to this, with a particular focus on the digital divide.

The use of AI in education was first proposed in 1956 by John McCarthy at Dartmouth College, USA (Zawacki-Richter et al., 2019). Indeed, Doroudi (2023) argues that AI and education are inextricably linked, as early developments in AI were driven by cognitive scientists. Further, intelligent tutoring systems were posited as an aim of AI as early as 1970. Efforts during the 1980s to develop personalised intelligent tutoring systems evolved into adaptive learning systems in the early 2000s. There are several different technologies that are called artificial intelligence. The distinction between generative AI, as exemplified by tools such as ChatGPT and DALL-E, and machine learning should be made clear. Language-based generative AI (genAI) creates text based on prompts given by the user. It is based on a large language model (LLM), trained on large sets of text scraped from the internet and other historic sources. This produces impressive content, which can then be further refined by conversation and follow-up questions. The text produced by the most recent version of ChatGPT (GPT-4) has been found to be indistinguishable from human-written content. It has been claimed that this passes the Turing Test, with the responses to behavioural questions indistinguishable from those of humans (Mei et al., 2024). In contrast, machine learning, a term coined by Arthur Samuel at IBM in 1959, is based on statistical algorithms that enable computers to learn from, and make predictions based on, data which has been classified. This enables the computer to learn by identifying patterns and making decisions without explicit program instructions. Example applications of machine learning include the monitoring of crops to provide better yields and intelligent management systems (Wall et al., 2021).

Easy access to genAI was provided in November 2022 when OpenAI launched ChatGPT. Since then, the creation and use of LLMs has grown rapidly. This has led to widespread concern about the impact of genAI on education (Memarian & Doleck, 2023), and about ensuring the validity of written assessments produced outside of exam conditions (Eke, 2023). There have been claims that LLMs can pass key assessments such as the bar exam (Katz et al., 2024) and medical exams (Ali et al., 2023). This in turn has produced a moral panic in the Global North, with concerns about the impact on the validity of assessment and the potential replacement of teachers by AI tutors (Selwyn, 2019).

There is, however, the potential for education to be positively transformed by this technology. Educational technology companies are investing heavily in AI tools to support teachers in their practice and reduce teacher workload (Slagg, 2023). This is a very seductive offer for not only educators but also for educational establishments and governments who want to be perceived as at the cutting edge of technology. For example, in the UK, the government has invested £2 million in Oak Academy to produce AI tools for schools (Department for Education, 2023) and a further £4 million to reduce workload (Department for Education, 2024). This willingness to use AI is found internationally (Schiff, 2021).

The proposal is that these tools may support educators by creating resources such as lesson plans and materials for formative assessment of students. Critics argue that this removes teacher engagement with the material. However, there is evidence that such AI-assisted tutoring can improve the teaching of low-skilled teachers (Wang et al., 2024). Another use of AI in education is to support students in their own work. Tools such as ChatGPT, it is claimed, can provide students with timely feedback on their work, to support their reflection and self-evaluation (Kestin et al., 2024).

Another long-held ambition for AI in education is the development of adaptive learning technologies (Strielkowski, 2024), which tailor educational content to individual student needs, thereby supporting the diverse needs of learners. Using approaches such as learning analytics, these AI-driven tools can bridge educational gaps and provide educational resources to underserved communities (Kamalov et al., 2023), whilst enabling teachers to focus on interactive and creative teaching rather than administrative tasks (Pedro et al., 2019). AI is therefore being promoted as a transformational force with the ability to reshape education.

However, the ethics of AI, and the differential impact it may have between the Global North and the Global South, need to be considered. For example, there is evidence of harm coming to people who have been involved in moderating the training data content for LLMs. Moderators have reported that they have been exposed to racist, violent, and sexist material which has impacted their mental health (Rowe, 2023). Some moderators report that they are suffering from post-traumatic stress after dealing with the content. The role of humans in AI is not exclusive to LLMs. Muldoon et al. (2024) highlight that machine learning involves significant data annotation, classifying data that subsequently drives the learning algorithms. The human processes involved are obscured by the perceived functionality of the AI tool. For example, a typical hour-long video will take over 800 hours of human work to annotate for the AI tool. This low-skilled work is typically done in sub-Saharan Africa, with pay of less than two dollars an hour (Tucker, 2024). This outsourcing to the Global South, where labour costs are low, perpetuates neo-colonial employment practices.

It can be seen, therefore, that AI, as a form of digital colonialism, is an “extraction machine” (Muldoon et al., 2024). They draw an analogy between the railways introduced into Africa in the 19th century, which laid the infrastructure for later extraction, and the fibre-optic cables introduced in 2009 in preparation for the digital extraction of the continent. LLMs have been trained, at great cost, by private companies in the Global North, and therefore there is a need to find ways of monetising the tools (Merchant, 2024). Thus, they are often marketed, with great hype, as solutions for every educator’s problem. Locally driven solutions and technology in the Global South are thereby displaced by neo-paternalist, extractive solutions designed to maximise profit for the companies of the Global North.

However, some reflexivity is needed here. What I have argued is from my perspective, and I’m WEIRD – a Western, Educated, Industrialised, Rich, and Democratic male from the UK (Linxen et al., 2021). My argument so far could be seen as a form of digital colonialism – one where I project my Western-centric paternalism onto my thinking around the issue. As Arora (2024a) states, my pessimism about AI technology is based upon my position of privilege, and the Global South does not have the luxury of pessimism, only optimism about the possibilities of change that can come through the adoption of generative AI. We, in the Global North, need to hear these voices and include them in our arguments. Arora (2024b) states we need to decolonise our approach to understanding AI in the Global South. We need to ask the questions: what does the Global South want from this technology, why, and what are the factors driving this?

Education is a key component of addressing global inequality (Gethin, 2023). Therefore, any tool that can transform education has the potential to improve global equality. Indeed, UNESCO states that AI has the possibility to address inequality and democratise education (Miao et al., 2021). The United Nations’ 17 Sustainable Development Goals (SDGs) concern the economic, social and environmental dimensions of sustainable development. All UN member states have committed themselves to working towards these goals by 2030. SDG 4 concerns education, with the aim to “Ensure inclusive and equitable quality education and promote lifelong learning opportunities for all” (The Global Goals, n.d.). Even before LLMs became prevalent, UNESCO had identified the transformative potential of AI in achieving this goal: “AI holds the potentials to … reducing barriers to access education, automating management processes, analysing learning patterns and optimizing learning processes with a view to improving learning outcomes” (UNESCO, 2019). Additionally, the African Union believes AI has the potential to transform education and help the African continent to achieve SDG 4 (African Union, 2024). It wishes to realise the potential of AI for development by increasing the digital literacy of teachers, so that education can make full use of AI.

AI’s promise to expand and democratise high-quality education should in turn improve skill development, which is essential for developing a workforce in rapidly changing job markets. This could therefore contribute to socioeconomic progress and the achievement of several Sustainable Development Goals. Already, AI is having an impact on language translation, which can help democratise education and produce inclusive growth across borders (African Union, 2024).

The swift rise of generative AI tools, and their transformative possibilities in education and other sectors, has caught the global imagination. The technology has a real “wow factor”. Attractive technology is not a new phenomenon. Novel technological solutions to problems, full of promises, are what Ames (2015) calls “charismatic technology”. The Gartner hype cycle evaluates the performance of technology, separating it from hype to inform commercial investment decisions. Currently, genAI has passed the peak of inflated expectations in this cycle (Gartner, 2024), indicating that there is growing scepticism about the effectiveness of genAI. Therefore, AI can be seen as the latest in a long line of attractive, seductive and charismatic technologies. Their principal offer is the promise to solve society’s problems. However, as Ames points out, charismatic machines are ultimately conservative and serve to transmit the status quo or continue a form of technological colonialism (Ames, 2019).

A previous example of a “charisma machine” in education is the One Laptop Per Child project. This project, started by Nicholas Negroponte at MIT, intended to provide ruggedised laptops costing only $100. The laptops were designed to be low-power devices that used an open-source operating system, with built-in educational software. It was intended that these laptops would be bought by governments and distributed to children throughout the developing world, which would drive discovery learning. Similarly, Sugata Mitra’s Hole in the Wall project provided free access to computers set into walls in public spaces. The computers had internet access and were installed at child height so that children could reach them. It was envisaged that children would self-learn by what Mitra called “minimally invasive education” (Mitra & Rana, 2001). Both utopian ideas assumed that if technology is provided, children will use it to teach themselves anything, from reading to engineering. However, in both cases, this did not happen. Mostly, the machines broke. The infrastructure was not in place to keep the required internet and computer systems functioning. Hole in the Wall computers were often dominated by older children playing games or viewing inappropriate content (Arora, 2010). In one advertisement for the One Laptop Per Child project, the screen was used as a source of illumination at night in a village without electricity. However, the project failed to consider many practicalities, including how the laptop could be recharged without the relevant infrastructure to support it (Ames, 2019). The critical question is whether generative AI will function as a charisma machine, potentially falling short of the high expectations assigned to it.

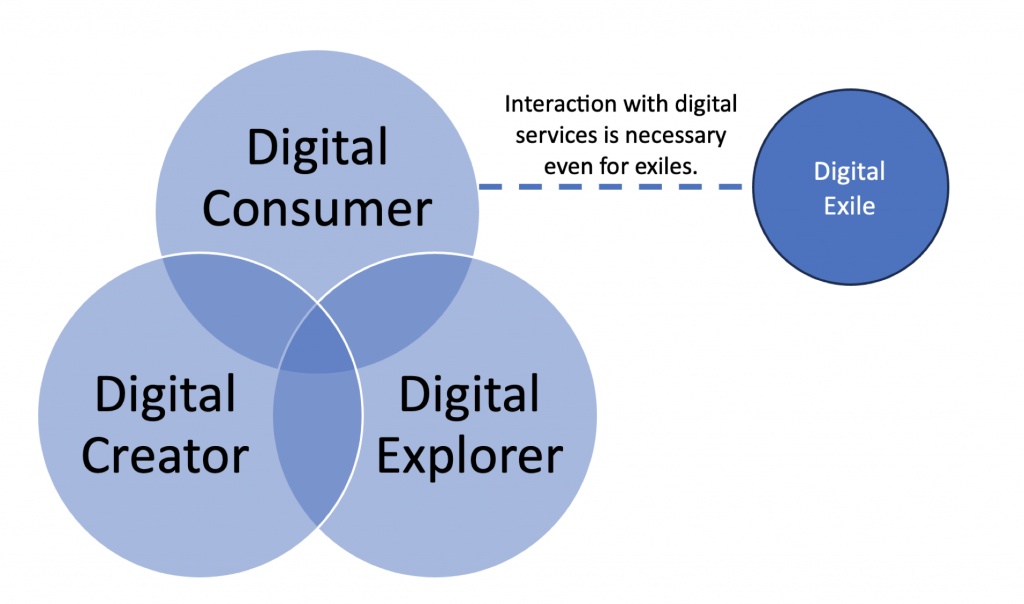

One way in which AI will fail to meet its potential to improve educational outcomes is if it increases inequality. Giannini (2023), writing for UNESCO, highlights that digital technology has a record of exacerbating inequality. Therefore, if it is to be successful, the implementation of AI in education must be conducted with the aim of closing the digital divide. The digital divide is the notion of the ‘haves’ and ‘have-nots’ with unequal access to digital tools, digital services, education, and the relevant skills needed to navigate a digital landscape. Digital inequality can be categorised through a framework of access. Van Dijk (2020) posits this access is defined as motivational, material, skills, and usage. Motivational access is where there is the motivation to use the technology; material is the possession of computers and internet access, including systems, data and power infrastructure; skills are the actual digital skills that the individual possesses allowing them to use digital devices, also known as digital literacy; and usage is the availability of sufficient time and applications.

This framework can be used to understand the challenges of employing AI in education in the Global South, and whether the digital divide is a barrier to effective implementation of AI-assisted education. For example, examining previous educational technology solutions through these lenses, it can be seen why initiatives such as One Laptop Per Child ultimately failed. In that case, fragile hardware and limited access to power and internet connectivity stifled the success of the project. This is a demonstration of the lack of material access. The domination of the Hole in the Wall computers by older children is an example of a failure of usage: the target population (younger children) were prevented from having sufficient opportunity to access the computers.

Let us first consider the motivation to use AI in education in the Global South. As outlined above, there has been widespread interest in the application of AI in education at a policy level by UNESCO, the African Union, and other governmental-level organisations. The limited research conducted so far demonstrates that educators are motivated to use AI (e.g., Kasun et al., 2024; Khlaif et al., 2024). For example, a study examined Nigerian pre-service teachers’ intentions to use AI in teaching genetics and found that intention to use the tool was predicted by perceived usefulness, aligning with the technology acceptance model (Adelana et al., 2024). For learners, AI can help with structuring essays, improving accessibility or even creating a DALL-E generated interactive learning metaverse (Rospigliosi, 2023). Since the COVID-19 pandemic, which disrupted formal education globally, youth in the Global South have been using online tools such as YouTube, TikTok and Instagram to learn skills and develop and share their knowledge, rejecting traditional online learning. This suggests an openness to innovation and ‘Global North’ tools (Arora, 2024b). Academic research similarly finds that the human-like qualities of AI chatbots improved Iranian students’ motivation to learn English (Ebadi & Amini, 2022). Thus, there seems to be widespread motivation to use AI tools in education in the Global South.

Next, consider material access. As previously described, material access includes the physical devices that individuals interact with, as well as the physical infrastructure allowing connection to both power sources and data, including the internet. There have been dramatic improvements recently in data connectivity, with Facebook investing in subsea cables to connect 16 African countries to the internet (Ahmad & Salvadori, 2020), providing 4G, 5G and broadband access to millions of people on the African continent. This will increase both the capacity and the reliability of internet connections. In contrast to the failure of the One Laptop Per Child project, this development makes the implementation of AI within the African continent more feasible. However, power is still a major challenge within Africa. Around half the population have no electricity at home. African governments are calling for support from the Global North via the COP climate summits, recognising the potential for renewable energy generation to support growth in power provision (Payton, 2024). These weaknesses in large-scale power infrastructure are partly mitigated by the increasing efficiency of computing devices. The internet is accessed primarily on low-powered, Android-based mobile phones, which can be easily recharged using low-cost solar devices. This substantially reduces the material barriers to accessing AI for educational purposes.

Next, consider individual digital skills. As mentioned in the discussion of motivation to use AI, there are growing digital skills in the younger population, with widespread engagement with technology such as TikTok and YouTube to develop skills. Many young people are also sharing their skills as creators, monetising and influencing. However, while the digital skills necessary for content generation are quite advanced, consuming content requires less expertise. Therefore, when considering the use of AI in education, there may be a digital skills gap that needs to be addressed. For example, one student may be comfortable using AI on a computer to help construct writing frames for assessment development, whilst another may only be able to use a computer to access social media to watch content. While genAI has a “low barrier” to entry, with a natural language interface, a growing body of research shows that the skill of prompt engineering is required to get the most out of the system (Schulhoff et al., 2024).

Critical evaluation skills must be developed for effective use of AI-generated content. Generative AI does not actually know anything. It is a prediction machine whose output is built by repeatedly choosing a probable next token (a word, or part of a word). It cannot be relied upon for facts. Studies have shown that the output is often wrong as generative AI “hallucinates” (Ji et al., 2023), inventing facts with convincing confidence. It cannot be reliably used for technical education or subjects that rely on technical or regulatory data (Birtill & Birtill, 2024), as it is unable to effectively apply contextual information, which may vary by international setting. It is also biased. This is because the datasets that LLMs are trained on contain biases. These biases reflect the biases on the internet in general (Navigli et al., 2023). For example, Reddit was included in the training data of ChatGPT, and it can be an unreliable source because anyone can post to it with their biases (Ravi & Vela, 2024). Additionally, and relevant for education, some LLMs have been trained to censor content. For example, ChatGPT flagged a request for material about genocide studies as inappropriate content. It refused to analyse German military documents from 1939, while being able to analyse similarly dated Allied documents (Waddington, 2024). Therefore, critical evaluation skills are needed to judge the appropriateness of the generated output.

Finally, consider usage – the degree to which different groups use AI. There is already evidence in the Global North of gender differences in usage of AI (Carvajal et al., 2024), with female students being more likely to opt out of its use. While there remain participation gaps in the Global South, many of these are driven by the factors discussed above (Udisha & Ambily Philomina, 2024). When skills, material access, and motivation are addressed, AI will be better able to support educational needs.

Considering the access framework outlined above, and the analysis of AI in education through this perspective, it appears that AI in education has the potential to succeed. Indeed, early research trials are beginning to emerge that demonstrate the success of AI-supported educational tools. Henkel et al. (2024), in a preprint article, describe a trial that took place in Ghana. Rori, an AI-powered maths tutor, was made available to students via WhatsApp on a mobile device for only one hour a week. These students showed a substantial improvement in maths compared to students who did not have access to this tool but whose other experiences were the same. This unstructured access to the AI tool demonstrates the possibility of children engaging with AI as a way of learning that does not rely on direct instruction, aligning with the Vygotskian constructivist approach (Sidorkin, 2024).

The Global South views AI as transformative, aligning with the optimism of big tech in the Global North. However, this is at odds with the extractive, colonial, and unreliable nature of the technology. Algorithmic colonisation is driven by the corporate agenda of companies such as Meta (Facebook, Instagram, WhatsApp etc.) and OpenAI. This colonialism differs from the spatial colonialism of the past; however, it is still the same extractive model, in which those of the Global South may be exploited for the profits of the Global North. In addition, this colonisation still impacts the workers of the Global South, imprisoning them and making them dependent on the infrastructure and technology of the Global North. Again, the charisma is sold as a solution to the problems of the South; however, as Birhane (2020) states, Western-developed AI solutions may not be suitable for the Global South, and may impede the development of local solutions that take account of its specific needs.

The problems of technology must be balanced against its perceived usefulness. Allmann (2022) points out that digital tools have become woven into society, and access to them should be seen as a right. There is a desire for the use of AI technology in the Global South as a solution to problems of education and economic development. However, the risks of depending on AI technology to solve deep-rooted institutional, societal and economic problems are high, as are the human cost and the environmental impact. This could paradoxically lead to greater digital inequality: the resources used for AI generation, whether power, data or human capital, could widen the North-South digital divide and thereby increase the socio-economic, developmental and educational problems that the Global South wishes to solve with AI technology. The rapid expansion of digital tools, and especially AI tools, mirrors classic colonialism, in which Western countries exploited the Global South by building technical infrastructure to extract resources for profit in the North. The charismatic, seductive technology of the railways in the late 19th century has been replaced by the technology infrastructure of the 21st century.

If the promise of AI in education that is sold by its proponents – one of automation, liberation and societal development – is to be achieved, then a substantial critical re-evaluation is required of the relationship between the companies who own the tools and the workers who train them. New models and relationships need to be developed between the owners of the technology in the North and the trainers in the Global South, along with robust critical and ethical frameworks to ensure that there are fair labour practices together with fair development of AI solutions that benefit the Global South rather than colonise it. Indeed, the African Union has stated that it wishes to create African-owned AI tools which will be able to address the unique challenges in African education, such as linguistic barriers or access to education (African Union, 2024).

Our understanding of AI adoption in the Global South needs significant reframing. The narrative must shift from one of technological determinism and Western-centric edtech solutions to one that takes account of local contexts and needs. This requires acknowledging that communities in the Global South are not passive recipients of technology but active agents in shaping how AI can serve their specific needs and development goals. Without stringent ethical frameworks and safeguards around the deployment, training, human costs and use of AI, it is likely that the charismatic extraction machine will continue to increase inequality among the people who pay the largest cost for this technology. This technology must be more than just “mathematical snake oil” (Birhane, 2020). If the wishes of UNESCO, the African Union and others in the Global South are to be met, and the optimism of youth fulfilled, then an urgent reassessment needs to take place to ensure that the technological benefits can be harnessed for the benefit of all.

References

Adelana, O. P., Ayanwale, M. A., & Sanusi, I. T. (2024). Exploring pre-service biology teachers’ intention to teach genetics using an AI intelligent tutoring-based system. Cogent Education, 11(1). https://doi.org/10.1080/2331186X.2024.2310976

African Union. (2024). Continental Artificial Intelligence Strategy: Harnessing AI for Africa’s Development and Prosperity. African Union. https://au.int/en/documents/20240809/continental-artificial-intelligence-strategy

Ahmad, N., & Salvadori, K. (2020, May 13). Building a transformative subsea cable to better connect Africa. Engineering at Meta. https://engineering.fb.com/2020/05/13/connectivity/2africa/

Ali, R., Tang, O. Y., Connolly, I. D., Zadnik Sullivan, P. L., Shin, J. H., Fridley, J. S., … & Telfeian, A. E. (2023). Performance of ChatGPT and GPT-4 on neurosurgery written board examinations. Neurosurgery, 93(6), 1353-1365. https://doi.org/10.1101/2023.03.25.23287743

Allmann, K. (2022). UK digital poverty evidence review 2022. London: Digital Poverty Alliance. https://digitalpovertyalliance.org/uk-digital-poverty-evidence-review-2022/

Ames, M. G. (2015, August). Charismatic technology. In Proceedings of the fifth decennial Aarhus Conference on Critical Alternatives (pp. 109-120). https://doi.org/10.7146/aahcc.v1i1.21199

Ames, M. G. (2019). The charisma machine: The life, death, and legacy of One Laptop Per Child. MIT Press.

Arora, P. (2010). Hope‐in‐the‐Wall? A digital promise for free learning. British Journal of Educational Technology, 41(5), 689-702. https://doi.org/10.1111/j.1467-8535.2010.01078.x

Arora, P. (2024a). The privilege of pessimism: The politics of despair towards the digital and the moral imperative to hope. Dialogues on Digital Society, 0(0). https://doi.org/10.1177/29768640241252103

Arora, P. (2024b). Creative data justice: a decolonial and indigenous framework to assess creativity and artificial intelligence. Information, Communication & Society, 1-17. https://doi.org/10.1080/1369118X.2024.2420041

Birhane, A. (2020). Algorithmic colonization of Africa. SCRIPTed, 17, 389. http://dx.doi.org/10.2966/scrip.170220.389.

Birtill, M., & Birtill, P. (2024). Implementation and Evaluation of Genai-Aided Tools in a UK Further Education College. In Artificial Intelligence Applications in Higher Education (pp. 195-214). Routledge. http://dx.doi.org/10.4324/9781003440178-12

Carvajal, D., Franco, C., & Isaksson, S. (2024). Will Artificial Intelligence Get in the Way of Achieving Gender Equality?. NHH Dept. of Economics Discussion Paper, (03). http://dx.doi.org/10.2139/ssrn.4759218

Department for Education. (2023, October 30). New support for teachers powered by Artificial Intelligence [Press release]. https://www.gov.uk/government/news/new-support-for-teachers-powered-by-artificial-intelligence

Department for Education. (2024, August 28). Teachers to get more trustworthy AI tech, helping them mark homework and save time [Press release]. https://www.gov.uk/government/news/teachers-to-get-more-trustworthy-ai-tech-as-generative-tools-learn-from-new-bank-of-lesson-plans-and-curriculums-helping-them-mark-homework-and-save

Doroudi, S. (2023). The intertwined histories of artificial intelligence and education. International Journal of Artificial Intelligence in Education, 33(4), 885-928. https://doi.org/10.1007/s40593-022-00313-2

Ebadi, S., & Amini, A. (2022). Examining the roles of social presence and human-likeness on Iranian EFL learners’ motivation using artificial intelligence technology: A case of CSIEC chatbot. Interactive Learning Environments, 32(2), 655-673. https://doi.org/10.1080/10494820.2022.2096638

Eke, D. O. (2023). ChatGPT and the rise of generative AI: Threat to academic integrity?. Journal of Responsible Technology, 13. https://doi.org/10.1016/j.jrt.2023.100060

Gartner. (2024, August 21). Gartner 2024 Hype Cycle for Emerging Technologies Highlights Developer Productivity, Total Experience, AI and Security [Press release]. https://www.gartner.com/en/newsroom/press-releases/2024-08-21-gartner-2024-hype-cycle-for-emerging-technologies-highlights-developer-productivity-total-experience-ai-and-security

Gethin, A. (2023). Distributional Growth Accounting: Education and the Reduction of Global Poverty, 1980-2022. World Inequality Lab.

Giannini, S. (2023). Generative AI and the future of education. UNESCO. https://doi.org/10.54675/HOXG8740

Henkel, O., Horne-Robinson, H., Kozhakhmetova, N., & Lee, A. (2024). Effective and Scalable Math Support: Evidence on the Impact of an AI-Tutor on Math Achievement in Ghana. arXiv preprint. https://doi.org/10.48550/arXiv.2402.09809

Ji, Z., Lee, N., Frieske, R., Yu, T., Su, D., Xu, Y., … & Fung, P. (2023). Survey of hallucination in natural language generation. ACM Computing Surveys, 55(12), 1-38. https://doi.org/10.1145/3571730

Kamalov, F., Santandreu Calonge, D., & Gurrib, I. (2023). New era of artificial intelligence in education: Towards a sustainable multifaceted revolution. Sustainability, 15(16). https://doi.org/10.3390/su151612451

Kasun, G. S., Liao, Y. C., Margulieux, L. E., & Woodall, M. (2024). Unexpected outcomes from an AI education course among education faculty: Toward making AI accessible with marginalized youth in urban Mexico. Frontiers in Education, 9(13). https://doi.org/10.3389/feduc.2024.1368604

Katz, D. M., Bommarito, M. J., Gao, S., & Arredondo, P. (2024). GPT-4 passes the bar exam. Philosophical Transactions of the Royal Society A, 382(2270). https://doi.org/10.1098/rsta.2023.0254

Kestin, G., Miller, K., Klales, A., Milbourne, T., & Ponti, G. (2024). AI Tutoring Outperforms Active Learning. Preprint. https://doi.org/10.21203/rs.3.rs-4243877/v1

Khlaif, Z. N., Ayyoub, A., Hamamra, B., Bensalem, E., Mitwally, M. A., Ayyoub, A., … & Shadid, F. (2024). University Teachers’ Views on the Adoption and Integration of Generative AI Tools for Student Assessment in Higher Education. Education Sciences, 14(10). https://doi.org/10.3390/educsci14101090

Linxen, S., Sturm, C., Brühlmann, F., Cassau, V., Opwis, K., & Reinecke, K. (2021). How WEIRD is CHI?. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1-14). https://doi.org/10.1145/3411764.3445488

Mei, Q., Xie, Y., Yuan, W., & Jackson, M. O. (2024). A Turing test of whether AI chatbots are behaviorally similar to humans. Proceedings of the National Academy of Sciences, 121(9), e2313925121. https://doi.org/10.1073/pnas.2313925121

Memarian, B., & Doleck, T. (2023). ChatGPT in education: Methods, potentials and limitations. Computers in Human Behavior: Artificial Humans, 100022. https://doi.org/10.1016/j.chbah.2023.100022

Merchant, B. (2024). AI generated business: The rise of AGI and the rush to find a working revenue model. AI Now Institute. Retrieved December 5, 2024, from https://ainowinstitute.org/general/ai-generated-business

Miao, F., Holmes, W., Huang, R., & Zhang, H. (2021). AI and education: A guidance for policymakers. UNESCO.

Mitra, S., & Rana, V. (2001). Children and the Internet: Experiments with minimally invasive education in India. British Journal of Educational Technology, 32(2), 221-232. https://doi.org/10.1111/1467-8535.00192

Muldoon, J., Graham, M., & Cant, C. (2024). Feeding the machine: The hidden human labour powering AI. Canongate Books.

Navigli, R., Conia, S., & Ross, B. (2023). Biases in large language models: origins, inventory, and discussion. ACM Journal of Data and Information Quality, 15(2), 1-21. https://doi.org/10.1145/3597307

Payton, B. (2024, October 18). Can Africa hit the accelerator on renewables? African Business. https://african.business/2024/10/energy-resources/can-africa-hit-the-accelerator-on-renewables

Pedro, F., Subosa, M., Rivas, A., & Valverde, P. (2019). Artificial intelligence in education: Challenges and opportunities for sustainable development. UNESCO.

Ravi, K., & Vela, A. E. (2024). Comprehensive dataset of user-submitted articles with ideological and extreme bias from Reddit. Data in Brief, 56, 110849. https://doi.org/10.1016/j.dib.2024.110849

Rospigliosi, P. A. (2023). Artificial intelligence in teaching and learning: What questions should we ask of ChatGPT? Interactive Learning Environments, 31(1), 1-3. https://doi.org/10.1080/10494820.2023.2180191

Rowe, N. (2023, August 2). ‘It’s destroyed me completely’: Kenyan moderators decry toll of training of AI models. The Guardian. https://www.theguardian.com/technology/2023/aug/02/ai-chatbot-training-human-toll-content-moderator-meta-openai

Schiff, D. (2022). Education for AI, not AI for education: The role of education and ethics in national AI policy strategies. International Journal of Artificial Intelligence in Education, 32(3), 527-563. https://doi.org/10.1007/s40593-021-00270-2

Schulhoff, S., Ilie, M., Balepur, N., Kahadze, K., Liu, A., Si, C., … & Resnik, P. (2024). The Prompt Report: A Systematic Survey of Prompting Techniques. arXiv preprint arXiv:2406.06608. https://doi.org/10.48550/arXiv.2406.06608

Selwyn, N. (2019). Should robots replace teachers?: AI and the future of education. John Wiley & Sons.

Sidorkin, A. M. (2024). Artificial intelligence: Why is it our problem? Educational Philosophy and Theory, 1–6. https://doi.org/10.1080/00131857.2024.2348810

Slagg, A. (2023, November 14). AI for Teachers: Defeating Burnout and Boosting Productivity. EdTech Magazine. https://edtechmagazine.com/k12/article/2023/11/ai-for-teachers-defeating-burnout-boosting-productivity-perfcon

Strielkowski, W., Grebennikova, V., Lisovskiy, A., Rakhimova, G., & Vasileva, T. (2024). AI‐driven adaptive learning for sustainable educational transformation. Sustainable Development. https://doi.org/10.1002/sd.3221

The Global Goals. (n.d.). 4 Quality Education. Retrieved December 1, 2024, from https://www.globalgoals.org/goals/4-quality-education/

Tucker, I. (2024, July 6). James Muldoon, Mark Graham and Callum Cant: ‘AI feeds off the work of human beings.’ The Observer. https://www.theguardian.com/technology/article/2024/jul/06/james-muldoon-mark-graham-callum-cant-ai-artificial-intelligence-human-work-exploitation-fairwork-feeding-machine

Udisha, O., & Ambily Philomina, I. G. (2024). Bridging the Digital Divide: Empowering Rural Women Farmers Through Mobile Technology in Kerala. Sustainability, 16(21), 9188. https://doi.org/10.3390/su16219188

UNESCO. (2019). Exploring the potential of artificial intelligence to accelerate the progress towards SDG 4 - Education 2030. UNESCO Executive Board, 206th, 2019 [587].

Van Dijk, J. (2020). The digital divide. John Wiley & Sons.

Waddington, L. (2024, November 25). Navigating Academic Integrity in the Age of GenAI: A Historian’s Perspective on Censorship. International Center for Academic Integrity. https://academicintegrity.org/resources/blog/536-navigating-academic-integrity-in-the-age-of-genai-a-historian-s-perspective-on-censorship

Wall, P. J., Saxena, D., & Brown, S. (2021). Artificial intelligence in the Global South (AI4D): Potential and risks. arXiv preprint arXiv:2108.10093. https://doi.org/10.48550/arXiv.2108.10093

Wang, R. E., Ribeiro, A. T., Robinson, C. D., Loeb, S., & Demszky, D. (2024). Tutor copilot: A human-AI approach for scaling real-time expertise. arXiv preprint arXiv:2410.03017. https://doi.org/10.48550/arXiv.2410.03017

Zawacki-Richter, O., Marín, V. I., Bond, M., Gouverneur, F., & Hunger, I. (2019). Systematic review of research on artificial intelligence applications in higher education—where are the educators? International Journal of Educational Technology in Higher Education, 16(1), 39. https://doi.org/10.1186/s41239-019-0171-0